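The Holistic Evaluation of Language Models (HELM) is a living benchmark designed to improve the transparency of language models. It rests on three principles. The first is broad coverage combined with explicit recognition of what the benchmark leaves out. The second is measurement across multiple metrics rather than accuracy alone. The third is standardized evaluation conditions, so that models can be compared directly. All data and analyses are freely available on the HELM website for exploration and further study.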

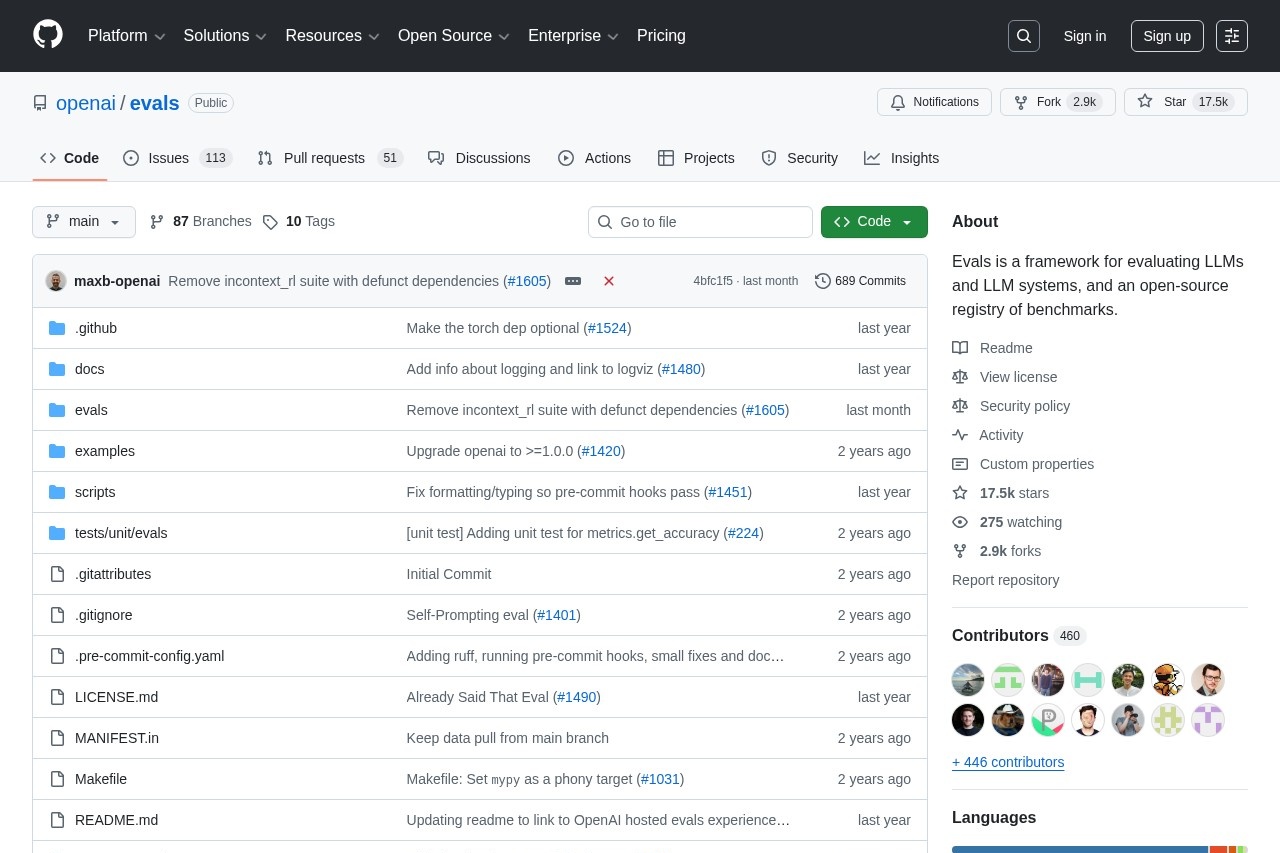
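To make the multi-metric idea concrete, here is a toy sketch that scores a model on one small scenario with two metrics. It is not HELM's code or API. The first metric is exact-match accuracy. The second is a robustness score that takes the worst result across the original question and perturbed variants of it, loosely in the spirit of HELM's robustness measurements. The scenario, the perturbations, and both stub models are hypothetical.

```python
from typing import Callable

# Hypothetical toy scenario: (question, gold answer) pairs.
SCENARIO: list[tuple[str, str]] = [
    ("What is the capital of France?", "Paris"),
    ("What is 2 + 2?", "4"),
    ("What color is a clear daytime sky?", "blue"),
]

# Hypothetical perturbations: each rewrites a question without changing its answer.
PERTURBATIONS: dict[str, Callable[[str], str]] = {
    "lowercase": str.lower,
    "extra_spaces": lambda s: "  ".join(s.split()),
}


def exact_match(prediction: str, gold: str) -> float:
    """1.0 if the prediction equals the gold answer (case-insensitive), else 0.0."""
    return float(prediction.strip().lower() == gold.strip().lower())


def evaluate(model: Callable[[str], str]) -> dict[str, float]:
    """Score one model on the scenario with two metrics instead of one."""
    accuracy, robustness = [], []
    for question, gold in SCENARIO:
        clean = exact_match(model(question), gold)
        accuracy.append(clean)
        # Robustness: worst case over the clean input and every perturbed variant.
        variants = [exact_match(model(p(question)), gold) for p in PERTURBATIONS.values()]
        robustness.append(min([clean, *variants]))
    n = len(SCENARIO)
    return {"accuracy": sum(accuracy) / n, "robustness": sum(robustness) / n}


def _normalize(text: str) -> str:
    """Lowercase and collapse whitespace."""
    return " ".join(text.lower().split())


def brittle_model(prompt: str) -> str:
    """Hypothetical stub that only recognizes the exact original wording."""
    return dict(SCENARIO).get(prompt, "I don't know")


def normalizing_model(prompt: str) -> str:
    """Hypothetical stub that normalizes case and whitespace before answering."""
    answers = {_normalize(q): a for q, a in SCENARIO}
    return answers.get(_normalize(prompt), "I don't know")


if __name__ == "__main__":
    print("brittle:    ", evaluate(brittle_model))      # {'accuracy': 1.0, 'robustness': 0.0}
    print("normalizing:", evaluate(normalizing_model))  # {'accuracy': 1.0, 'robustness': 1.0}
```

Both stubs reach perfect accuracy. Only the one that normalizes its input keeps that score under perturbation, and a single accuracy number would not distinguish the two.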

OpenAI Evals remains one of the leading open-source LLM evaluation frameworks as of late 2025. It provides a registry of existing benchmarks and YAML templates for defining custom evals with little code. It also supports model-graded scoring for open-ended outputs and private evals. These features make it well suited to reproducible LLM evaluation on your own data without exposing that data publicly.
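The basic OpenAI Evals templates read samples from a JSONL file. Each line of that file holds a chat-formatted `input` and an `ideal` answer. The script below is a rough local sketch of that idea. It writes two such samples and scores a stub model with a starts-with check similar in spirit to the exact-match template. The samples, `stub_model`, and the helper functions are hypothetical. A real eval would instead be registered in YAML and run against an actual model with the `oaieval` CLI.

```python
import json
import tempfile
from pathlib import Path
from typing import Callable, Union

# Hypothetical samples in a chat-style JSONL layout: an "input" message list
# plus an "ideal" answer (a string, or a list of accepted strings in this sketch).
SAMPLES = [
    {"input": [{"role": "system", "content": "Answer with a single word."},
               {"role": "user", "content": "Capital of Japan?"}],
     "ideal": "Tokyo"},
    {"input": [{"role": "system", "content": "Answer with a single word."},
               {"role": "user", "content": "Opposite of hot?"}],
     "ideal": ["cold", "Cold"]},
]


def write_samples(path: Path) -> None:
    """Write one JSON object per line."""
    with path.open("w", encoding="utf-8") as f:
        for sample in SAMPLES:
            f.write(json.dumps(sample) + "\n")


def match_score(completion: str, ideal: Union[str, list[str]]) -> bool:
    """Pass if the completion starts with any accepted answer (exact-match style)."""
    ideals = [ideal] if isinstance(ideal, str) else ideal
    return any(completion.startswith(answer) for answer in ideals)


def run_local_eval(path: Path, model: Callable[[list[dict]], str]) -> float:
    """Load the JSONL samples, query the model, and return the fraction that match."""
    lines = path.read_text(encoding="utf-8").splitlines()
    records = [json.loads(line) for line in lines if line]
    hits = [match_score(model(r["input"]), r["ideal"]) for r in records]
    return sum(hits) / len(hits)


def stub_model(messages: list[dict]) -> str:
    """Hypothetical stand-in for an LLM call: answers from a fixed lookup table."""
    canned = {"Capital of Japan?": "Tokyo.", "Opposite of hot?": "warm"}
    return canned.get(messages[-1]["content"], "")


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        samples_path = Path(tmp) / "samples.jsonl"
        write_samples(samples_path)
        print(f"accuracy = {run_local_eval(samples_path, stub_model):.2f}")  # accuracy = 0.50
```

Keeping the samples in a plain JSONL file, separate from the scoring code, is what makes a run easy to reproduce. The same file can be rescored against a different model without touching the data.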

DeepEval is another leading open-source LLM evaluation framework as of late 2025. It offers Pytest-style unit testing with 50+ metrics, custom metrics built with G-Eval, synthetic dataset generation, and red teaming, all free to run locally. It integrates readily with RAG pipelines, agents, and chatbots. Its companion cloud platform, Confident AI, adds monitoring and collaboration features.
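In DeepEval's unit-testing style, each interaction is wrapped in an `LLMTestCase` and checked against metrics with `assert_test`, and the test file is run through `deepeval test run`. Its built-in metrics call an LLM judge, so they need model credentials. The dependency-free sketch below mimics that general shape with a hypothetical keyword-coverage metric so the threshold logic can run offline. `LLMCase`, `KeywordCoverageMetric`, and `assert_llm_test` are illustrative names, not DeepEval's API.

```python
from dataclasses import dataclass, field


@dataclass
class LLMCase:
    """Hypothetical test case: the prompt, the model's answer, and expected keywords."""
    input: str
    actual_output: str
    expected_keywords: list[str] = field(default_factory=list)


@dataclass
class KeywordCoverageMetric:
    """Hypothetical metric: fraction of expected keywords found in the output."""
    threshold: float = 0.5
    score: float = 0.0

    def measure(self, case: LLMCase) -> float:
        if not case.expected_keywords:
            self.score = 1.0
        else:
            text = case.actual_output.lower()
            hits = sum(kw.lower() in text for kw in case.expected_keywords)
            self.score = hits / len(case.expected_keywords)
        return self.score

    def is_successful(self) -> bool:
        return self.score >= self.threshold


def assert_llm_test(case: LLMCase, metrics: list[KeywordCoverageMetric]) -> None:
    """Raise AssertionError (so pytest reports a failure) if any metric is below threshold."""
    failures = []
    for metric in metrics:
        metric.measure(case)
        if not metric.is_successful():
            failures.append(f"{type(metric).__name__}: {metric.score:.2f} < {metric.threshold}")
    assert not failures, "; ".join(failures)


def test_refund_policy_answer():
    # pytest collects functions named test_*; the chatbot output here is hard-coded.
    case = LLMCase(
        input="What is your refund window?",
        actual_output="You can request a full refund within 30 days of purchase.",
        expected_keywords=["refund", "30 days"],
    )
    assert_llm_test(case, [KeywordCoverageMetric(threshold=0.5)])
```

Saved as a `test_*.py` file, this runs under plain `pytest`. Each metric owns its threshold, so one test can combine several checks and fail with a message naming the metric that fell short.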