MiniMax Goes Public on HKEX: Raises Over HK$5 Billion in Record 4-Year IPO Sprint, Shares Surge 50% on Debut Day

On January 8, 2026, Chinese AI unicorn MiniMax made history with its blockbuster Hong Kong IPO, raising more than HK$5 billion (≈US$640 million). Going from founding to public listing in just four years, it took the fastest path to market among major Chinese AI companies. Priced at the top of the range, the stock (ticker: 9898.HK) opened 38% higher and closed up 50% on debut, valuing the company at over HK$45 billion. The explosive first-day performance reflects surging global appetite for frontier AI infrastructure plays and cements MiniMax as the hottest AI IPO of the post-ChatGPT era.

Moore Threads Releases SimuMax 1.1: Open-Source Distributed Training Platform Evolves into All-in-One Powerhouse with Visual Config & Intelligent Parallel Strategy Search

On January 8, 2026, Moore Threads officially launched SimuMax 1.1, a major upgrade to its open-source distributed training toolkit. The new version transforms SimuMax from a pure simulation engine into a complete end-to-end distributed training platform, introducing a visual configuration interface, intelligent parallel strategy auto-search, dynamic resource-aware scheduling, and seamless integration with MTTorch and MTTensor. Early internal benchmarks show up to a 42% improvement in training throughput and a 35% reduction in configuration time for large-scale LLM training on MTT GPU clusters, making high-efficiency distributed training finally accessible to the open-source community.

Agnes AI Drops SeaLLM-8B: An Open-Source Southeast Asian Language Powerhouse That Outperforms Llama-3.1-8B on Regional Benchmarks

Agnes AI officially open-sourced its self-developed SeaLLM-8B model on Hugging Face today, January 9, 2026. This 8-billion-parameter model, specifically pre-trained and post-trained on Southeast Asian languages (Thai, Vietnamese, Indonesian, Malay, Tagalog, Burmese, Khmer, Lao, plus strong English & Chinese), delivers state-of-the-art performance in SEA-centric tasks. It significantly outperforms Llama-3.1-8B, Qwen2.5-7B, and Gemma-2-9B across most regional multilingual benchmarks while maintaining excellent English capability — all released under Apache 2.0 for commercial use.

Mistral AI Completes €1.7 Billion Series C: ASML Leads with €1.3B Investment, Pushing Valuation to €11.7 Billion and Cementing Europe's AI Champion Status

French AI powerhouse Mistral AI announced on September 9, 2025, the close of its massive €1.7 billion Series C round — the largest ever for a European AI company. Led by Dutch semiconductor giant ASML (€1.3 billion commitment for ~11% stake), with participation from Nvidia, Andreessen Horowitz, DST Global, and others, the deal catapults Mistral's post-money valuation to €11.7 billion ($13.8 billion). This strategic alliance pairs frontier AI innovation with chipmaking expertise, signaling a bold push for European tech sovereignty amid U.S. dominance.
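The deal figures above can be cross-checked with simple arithmetic. The sketch below uses only the numbers quoted in this item and assumes the stake is measured against the post-money valuation; the variable names are illustrative, not taken from any filing.

```python
# Figures quoted in the item above, in billions of euros.
asml_investment = 1.3
round_size = 1.7
post_money_valuation = 11.7

# Assumption: the stake is the investment divided by the post-money valuation.
implied_stake = asml_investment / post_money_valuation

# Share of the Series C contributed by the lead investor.
lead_share_of_round = asml_investment / round_size

# Pre-money valuation implied by subtracting the new money from post-money.
pre_money_valuation = post_money_valuation - round_size

print(f"Implied ASML stake: {implied_stake:.1%}")
print(f"ASML share of the round: {lead_share_of_round:.1%}")
print(f"Implied pre-money valuation: EUR {pre_money_valuation:.1f}B")
```

Under that assumption the implied stake comes out at roughly 11%, in line with the "~11%" reported.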

Apple Open-Sources SHARP: Single 2D Photo to Photorealistic 3D Gaussian Scene in Under 1 Second — Free and Ready to Revolutionize Content Creation

Apple Machine Learning Research dropped SHARP on December 17, 2025 — a fully open-source model that transforms any single 2D photo into a metric-scale 3D Gaussian splat representation in less than a second on a standard GPU. Delivering sharper details, higher structural fidelity (up to 40% better on key metrics like LPIPS/DISTS), and real-time novel view rendering, SHARP obliterates multi-image bottlenecks and goes fully free on GitHub. Early tests show explosive potential for instant 3D asset pipelines in games, AR/VR experiences, and digital twins.

NVIDIA Acquires SchedMD: Taking Control of Slurm to Supercharge GPU Cluster Scheduling and Slash Enterprise Multi-Cluster Overhead

NVIDIA announced on December 15, 2025, the acquisition of SchedMD, the primary developer and commercial supporter of Slurm, the world's most widely used open-source workload manager, which powers more than half of the top systems on the TOP500 list and countless AI training clusters. This move tightens NVIDIA's grip on the full AI infrastructure stack, promising deeper GPU-aware scheduling, heterogeneous cluster optimization, and dramatic cost reductions for enterprises juggling massive multi-cluster environments. Slurm remains fully open-source and vendor-neutral, with NVIDIA committing to accelerated innovation while honoring existing customer support contracts.

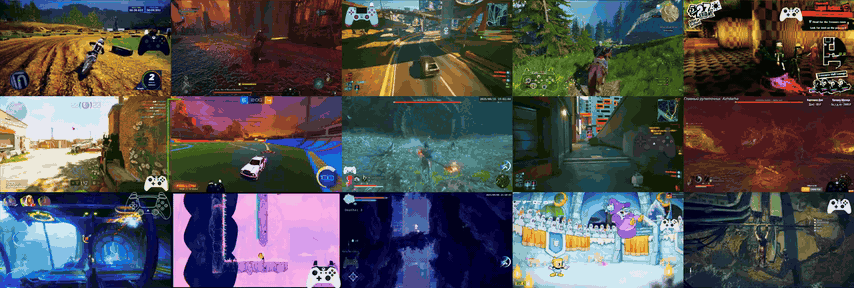

NVIDIA Unleashes NitroGen: Open-Source AI Beast That Masters 1,000+ Games — From Zero-Shot Play to Robotic Revolution

NVIDIA dropped NitroGen on December 20, 2025 — a groundbreaking open-source vision-to-action foundation model trained on 40,000 hours of gameplay across 1,000+ titles. This 500M-param beast ingests raw game frames and spits out precise controller actions, zero-shot handling platformers, racers, RPGs, and shooters. Fine-tuned on unseen games, it crushes baselines with 52% higher task success rates — paving the way for autonomous game NPCs, QA bots, and real-world robots via its GR00T roots. Model weights, dataset, and universal Gymnasium simulator are live now on Hugging Face.

NVIDIA Acquires SchedMD: Taking Control of Slurm to Supercharge AI Workload Scheduling and Slash Multi-Cluster Management Costs

NVIDIA announced on December 15, 2025, the acquisition of SchedMD, the primary developer and maintainer of Slurm, the world's most widely used open-source workload manager, which powers more than half of the top systems on the TOP500 list. By bringing Slurm in-house after a decade-long collaboration, NVIDIA aims to deeply optimize GPU scheduling for massive AI training and inference, enable seamless heterogeneous cluster management, and dramatically reduce enterprise overhead in running multi-vendor, multi-site AI infrastructures. Slurm remains fully open-source and vendor-neutral, with NVIDIA committing to accelerated innovation and continued support for existing customers.

Meta Launches SAM Audio: The First Unified Multimodal Model That Isolates Any Sound from Complex Mixtures with Intuitive Prompts

Meta unveiled SAM Audio on December 16, 2025 — the groundbreaking extension of its Segment Anything family into audio, claiming the world's first unified multimodal model for sound separation. It isolates specific sounds like vocals, instruments, or ambient noise using text descriptions, visual clicks in videos, or time-span markings — alone or combined — all in a seamless, prompt-driven workflow. Open-sourced with small, base, and large variants, plus benchmarks and a perception encoder, it's now live on the Segment Anything Playground and Hugging Face, slashing barriers for creators and accelerating innovations in editing, accessibility, and beyond.

Meta Drops SAM Audio: The First Unified Multimodal Model That Isolates Any Sound with Text, Visual, or Time Prompts — Revolutionizing Audio Editing Forever

Meta unveiled SAM Audio on December 16, 2025 — extending the legendary Segment Anything family into sound with the world's first unified multimodal audio separation model. Supporting intuitive text descriptions, visual clicks in videos, and time-span anchors (alone or combined), it cleanly extracts voices, instruments, or ambient noise from messy real-world mixes in seconds. Open-sourced with small/base/large variants, PE-AV perception encoder, and new benchmarks, it's already crushing competitors on SAM Audio-Bench while powering faster-than-real-time edits — a game-changer for creators, podcasters, filmmakers, and accessibility tools.

Meta Drops SAM 3D: The "Segment Anything" Revolution Goes 3D — Reconstructing Full Objects from a Single Image in Seconds

Meta Reality Labs launched SAM 3D on November 19, 2025 — a groundbreaking extension of the Segment Anything family that reconstructs textured, layout-aware 3D meshes from just one 2D photo. Featuring SAM 3D Objects for everyday items/scenes and SAM 3D Body for human pose/shape estimation, it crushes occlusion and clutter challenges with state-of-the-art fidelity. Fully open-sourced with checkpoints, code, and a new benchmark, early integrations already power Facebook Marketplace's "View in Room" — slashing 3D asset creation from hours to instants and democratizing AR/VR content.

NVIDIA Unleashes Nemotron 3 Series: Open-Source Powerhouse Delivers 4x Throughput, Rewriting the Rules for Agentic AI at Scale

NVIDIA launched the Nemotron 3 family of open models on December 15, 2025 — starting with Nemotron 3 Nano (30B params) available immediately, followed by Super and Ultra in early 2026. Powered by a breakthrough hybrid Mamba-Transformer MoE architecture, Nano achieves 4x higher token throughput than Nemotron 2 Nano while slashing reasoning tokens by up to 60%. With native 1M-token context, open weights, datasets (3T tokens), and RL libraries, this series arms developers for transparent, efficient multi-agent systems — early adopters like Palantir, Perplexity, and ServiceNow are already deploying it to crush costs and boost intelligence.

Zhipu AI Wraps Multimodal Open Source Week: Four Core Video Generation Technologies Fully Open-Sourced — Paving the Way for Next-Gen AI Filmmaking

On December 13, 2025, Zhipu AI concluded its "Multimodal Open Source Week" with a bang — open-sourcing four pivotal technologies powering advanced video generation: GLM-4.6V for visual understanding, AutoGLM for intelligent device control, GLM-ASR for high-fidelity speech recognition, and GLM-TTS for expressive speech synthesis. These modules, now freely available on GitHub and Hugging Face, enable end-to-end multimodal pipelines that fuse perception, reasoning, audio, and action — slashing barriers for developers building interactive video agents, embodied AI, and cinematic tools.

Mistral AI Unleashes Mistral 3: The Apache 2.0 Open-Source Powerhouse Family Crushing Proprietary Giants with Edge-to-Frontier Multimodal Might

Mistral AI launched the Mistral 3 series on December 2, 2025: a blockbuster family of 10 fully open-weight multimodal models under the permissive Apache 2.0 license, spanning Ministral 3 (3B/8B/14B dense variants in base, instruct, and reasoning flavors) to the beastly Mistral Large 3 (a 675B-total-parameter MoE with 41B active). Optimized for everything from drones to datacenters, these models nail image understanding, non-English prowess, and SOTA efficiency, debuting at #2 among open-source non-reasoning models on LMSYS Arena while cutting token output roughly tenfold in real-world chats. This full-line return to unrestricted commercial openness is a direct gut punch to closed ecosystems like OpenAI and Google.
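To put the model counts and the mixture-of-experts figures in perspective, here is a minimal arithmetic sketch. It uses only the numbers quoted in this item; the bytes-per-parameter values are assumptions for a rough weight-memory estimate, not published specifications.

```python
# Family size: three dense Ministral 3 sizes, each in three flavors, plus Large 3.
ministral_sizes = ["3B", "8B", "14B"]
flavors = ["base", "instruct", "reasoning"]
family_size = len(ministral_sizes) * len(flavors) + 1

# Mistral Large 3 parameter counts as quoted above, in billions.
total_params_b = 675
active_params_b = 41

# Fraction of the weights used per token in the MoE.
active_fraction = active_params_b / total_params_b

# Rough weight-only memory footprint in GB (1 GB = 1e9 bytes).
# Assumption: 1 byte per parameter (8-bit) or 2 bytes (16-bit); activations
# and KV cache are ignored.
weights_gb_8bit = total_params_b * 1
weights_gb_16bit = total_params_b * 2

print(f"Models in the family: {family_size}")
print(f"Active fraction per token: {active_fraction:.1%}")
print(f"Weights at 8-bit: ~{weights_gb_8bit} GB; at 16-bit: ~{weights_gb_16bit} GB")
```

Only about 6% of the weights are active per token, which is why per-token compute is far lower than the total size suggests, although all 675B parameters still have to be stored.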

SenseTime Unleashes NEO Architecture: The Native Multimodal Revolution That Fuses Vision and Language at the Core — Open-Sourced to Shatter Efficiency Barriers

SenseTime, in collaboration with Nanyang Technological University's S-Lab, launched the NEO architecture on December 5, 2025 — the world's first scalable, open-source native Vision-Language Model (VLM) framework that ditches modular "Frankenstein" designs for true bottom-up fusion. Featuring pixel-direct embedding, Native-RoPE for spatiotemporal harmony, and hybrid attention mechanisms, NEO achieves SOTA performance on benchmarks like MMMU and MMBench with 90% less training data than GPT-4V. The 2B and 9B models are now live on GitHub, with video/3D extensions slated for Q1 2026, igniting a paradigm shift toward edge-deployable multimodal brains.

Microsoft Unleashes Fara-7B: The 7B-Parameter Agentic SLM That Hijacks Your PC Like a Pro — Smarter Than GPT-4o at 1/100th the Size

Microsoft Research dropped Fara-7B on November 24, 2025 — its first agentic small language model (SLM) built for "computer use," turning screenshots into seamless mouse/keyboard symphonies. With just 7B parameters, this multimodal beast predicts thoughts, clicks, and scrolls with pinpoint accuracy, outperforming UI-TARS and rivaling GPT-4o on benchmarks like WebVoyager (85% success) while averaging 16 steps per task vs. 41 for giants. Open-sourced under MIT on Hugging Face and Azure Foundry, it's primed for on-device Copilot+ PC runs — early tests show devs automating web marathons in sandboxed bliss, no cloud crutches needed.

Meta Drops SAM 3D: The SAM Evolution That Turns Single Images into Photorealistic 3D Worlds, Crushing Occlusion Nightmares for AR and Robotics

Meta AI unveiled SAM 3D on November 19, 2025 — a groundbreaking extension of the Segment Anything Model family that reconstructs full 3D geometry, textures, and poses from just one everyday photo. Featuring dual powerhouses SAM 3D Objects (for scenes and clutter-crushing object meshes) and SAM 3D Body (for human shape estimation), it leverages a massive human-feedback data engine to outperform rivals on real-world benchmarks. With open checkpoints, inference code, and a new eval suite now live on GitHub and Hugging Face, SAM 3D slashes 3D capture time from hours to seconds — igniting a firestorm in AR/VR, robotics, and VFX where traditional multi-view setups just got obsoleted.