Zhipu AI Wraps Up Multimodal Open Source Week: Four Core Video Generation Technologies Fully Open-Sourced, Paving the Way for Next-Gen AI Filmmaking

On December 13, 2025, Zhipu AI concluded its "Multimodal Open Source Week," having open-sourced four technologies that underpin advanced video generation: GLM-4.6V for visual understanding, AutoGLM for intelligent device control, GLM-ASR for high-fidelity speech recognition, and GLM-TTS for expressive speech synthesis. The modules are freely available on GitHub and Hugging Face. Together they support end-to-end multimodal pipelines that combine perception, reasoning, audio, and action, lowering the barrier for developers building interactive video agents, embodied AI, and cinematic tools.

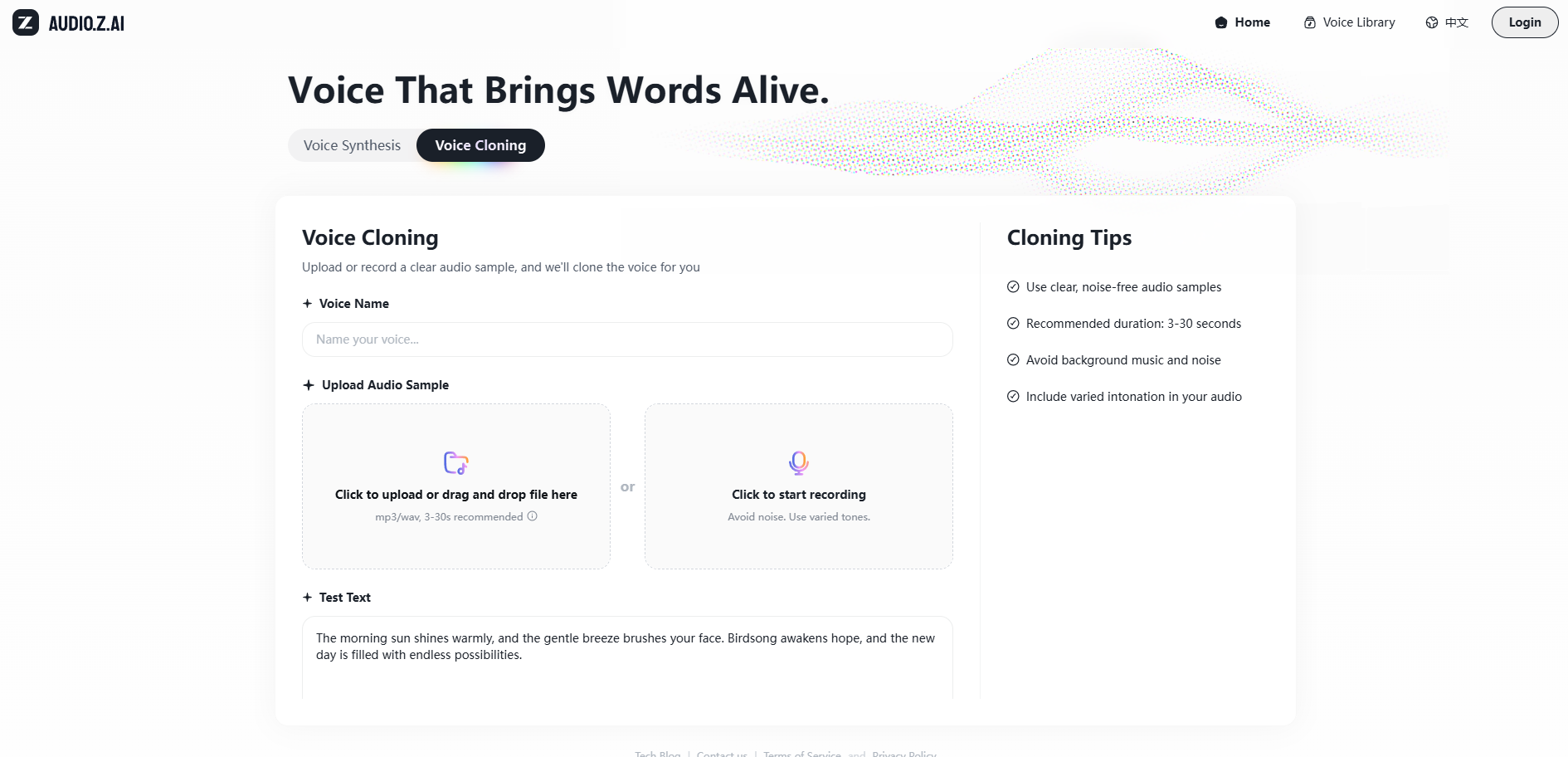

Zhipu AI Open-Sources GLM-TTS: Multi-Reward RL-Powered TTS That Clones Voices from 3 Seconds of Audio with Emotional Depth

Zhipu AI released and open-sourced GLM-TTS on December 11, 2025. It is an industrial-grade text-to-speech system that can clone a voice from just 3 seconds of reference audio while delivering natural prosody, emotional expressiveness, and precise pronunciation. Built on a two-stage architecture and trained with multi-reward reinforcement learning under the GRPO (Group Relative Policy Optimization) framework, it achieves state-of-the-art results among open-source models on character error rate (0.89%) and emotional fidelity, using only 100K hours of training data. The weights are available on GitHub and Hugging Face, and the model integrates with Zhipu's ecosystem for audiobooks, assistants, and dubbing.
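For readers unfamiliar with the metric, character error rate (CER) is the character-level edit distance between a reference text and a transcript, divided by the length of the reference. For TTS systems, the transcript is typically produced by running the synthesized audio through a speech recognizer. The announcement does not describe Zhipu's evaluation protocol, so the following is only a minimal sketch of the general formula; the function names are illustrative and are not part of GLM-TTS.

```python
def edit_distance(reference: str, hypothesis: str) -> int:
    """Levenshtein distance: minimum number of character insertions,
    deletions, and substitutions needed to turn reference into hypothesis."""
    # prev[j] holds the distance between the reference prefix processed
    # so far and the first j characters of the hypothesis.
    prev = list(range(len(hypothesis) + 1))
    for i, ref_char in enumerate(reference, start=1):
        curr = [i]
        for j, hyp_char in enumerate(hypothesis, start=1):
            substitution_cost = 0 if ref_char == hyp_char else 1
            curr.append(min(
                prev[j] + 1,                      # deletion
                curr[j - 1] + 1,                  # insertion
                prev[j - 1] + substitution_cost,  # match or substitution
            ))
        prev = curr
    return prev[-1]


def character_error_rate(reference: str, hypothesis: str) -> float:
    """CER = edit distance / number of characters in the reference."""
    if not reference:
        raise ValueError("reference text must not be empty")
    return edit_distance(reference, hypothesis) / len(reference)


if __name__ == "__main__":
    # Hypothetical example: the recognizer heard "hallo" instead of "hello".
    print(character_error_rate("hello", "hallo"))
```

Under this definition, a CER of 0.89% corresponds to fewer than one character error per 100 reference characters.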