Zhipu AI Open-Sources GLM-TTS: Multi-Reward RL-Powered TTS That Clones Voices in 3 Seconds with Emotional Depth

Category: Tool Dynamics

Excerpt:

Zhipu AI released and open-sourced GLM-TTS on December 11, 2025 — an industrial-grade text-to-speech system that clones any voice from just 3 seconds of audio, delivering natural prosody, emotional expressiveness, and precise pronunciation. Powered by a two-stage architecture and multi-reward reinforcement learning (GRPO framework), it hits open-source SOTA on character error rate (0.89%) and emotional fidelity using only 100K hours of training data. Weights are now available on GitHub and Hugging Face, with seamless integration into Zhipu's ecosystem for audiobooks, assistants, and dubbing.

🗣️ Zhipu AI’s GLM-TTS: The Emotion-Driven Contender Redefining Open-Source TTS

The open-source text-to-speech (TTS) race just gained a game-changing contender from China — and it’s speaking with genuine emotion. Zhipu AI’s GLM-TTS isn’t another flat-voiced synthesizer; it’s a zero-shot powerhouse that captures timbre, speech habits, and feelings from tiny audio snippets, turning text into speech that rivals human performers.

Built on a two-stage pipeline, with a large language model (LLM) generating semantic tokens and a Flow Matching model turning them into high-fidelity waveforms, it tackles longstanding TTS pain points head-on: polyphone ambiguity, rare character pronunciations, and robotic intonation. Its secret weapon? A multi-reward reinforcement learning (RL) framework that blends character accuracy (measured by CER), speaker similarity, emotion scoring, and paralinguistic cues (laughs, sighs). Optimizing several rewards at once pushes the model past the limits of supervised learning and makes it harder to game any single metric (reward hacking), while boosting natural expressiveness.
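To make the multi-reward idea concrete, here is a minimal Python sketch of how several reward signals could be blended and then turned into group-relative advantages, the core step of GRPO. It is an illustration under stated assumptions, not Zhipu AI's training code: the weights, the `combined_reward` helper, and the example scores are all hypothetical, and the choice of population standard deviation is one of several reasonable normalizations.

```python
from statistics import mean, pstdev

# Hypothetical weights: the actual reward mix used for GLM-TTS is not stated in this article.
WEIGHTS = {"accuracy": 0.4, "similarity": 0.3, "emotion": 0.2, "paralinguistic": 0.1}


def combined_reward(cer, similarity, emotion, paralinguistic):
    """Blend per-dimension scores (each in [0, 1]) into one scalar reward.

    CER is an error rate, so it is flipped into an accuracy term first.
    """
    accuracy = 1.0 - min(max(cer, 0.0), 1.0)
    return (
        WEIGHTS["accuracy"] * accuracy
        + WEIGHTS["similarity"] * similarity
        + WEIGHTS["emotion"] * emotion
        + WEIGHTS["paralinguistic"] * paralinguistic
    )


def group_relative_advantages(rewards, eps=1e-8):
    """Normalize rewards within one group of samples drawn for the same prompt.

    Each sample is scored against its siblings (mean and standard deviation
    of the group) rather than against a learned value function.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


# Made-up scores for four candidate renderings of the same sentence.
group = [
    {"cer": 0.00, "similarity": 0.90, "emotion": 0.80, "paralinguistic": 0.70},
    {"cer": 0.05, "similarity": 0.85, "emotion": 0.60, "paralinguistic": 0.50},
    {"cer": 0.20, "similarity": 0.95, "emotion": 0.90, "paralinguistic": 0.90},
    {"cer": 0.01, "similarity": 0.70, "emotion": 0.40, "paralinguistic": 0.20},
]
rewards = [combined_reward(**scores) for scores in group]
advantages = group_relative_advantages(rewards)
for r, a in zip(rewards, advantages):
    print(f"reward={r:.3f}  advantage={a:+.3f}")
```

In this toy group, the third candidate scores highest on similarity and emotion yet still ranks below the first because of its higher CER, which is the kind of trade-off a blended reward is meant to enforce.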

🔑 Core Breakthroughs That Set It Apart

| Feature | Details |
|---|---|
| 3-Second Zero-Shot Cloning | Upload a short 3–10 second voice clip → instant voice replica with 95%+ similarity. Supports dialects, styles, and accents; no fine-tuning required. |
| Multi-Reward RL Magic | Powered by Group Relative Policy Optimization (GRPO), it optimizes across multiple dimensions (rhythm, emotion, naturalness) to outperform peers. Achieves open-source SOTA on Seed-TTS-Eval with a CER of just 0.89%. |
| Precise Phoneme Control | Inline pronunciation overrides fix tricky words (e.g., polyphones, technical terms), making it ideal for educational content and professional reads. |
| Efficiency at Scale | Trained on just 100K hours of data (far less than commercial giants), with pretraining completed in 4 days on a single machine; RL fine-tuning and LoRA tweaks take only 1 day. |
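For readers unfamiliar with the headline metric, character error rate (CER) is the character-level edit distance between a reference text and a transcript, divided by the reference length. The sketch below shows that calculation in plain Python. It is a generic illustration, not the Seed-TTS-Eval scoring script: benchmarks of this kind typically transcribe the synthesized audio with an ASR model first and may normalize punctuation, steps that are assumed away here, and the example strings are made up.

```python
def edit_distance(ref, hyp):
    """Levenshtein distance: minimum insertions, deletions, and substitutions."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, 1):
        curr = [i]
        for j, h in enumerate(hyp, 1):
            cost = 0 if r == h else 1
            curr.append(min(
                prev[j] + 1,         # delete a reference character
                curr[j - 1] + 1,     # insert a hypothesis character
                prev[j - 1] + cost,  # match or substitute
            ))
        prev = curr
    return prev[-1]


def cer(reference, hypothesis):
    """Character error rate: edit distance divided by reference length."""
    if not reference:
        raise ValueError("reference text must not be empty")
    return edit_distance(reference, hypothesis) / len(reference)


# Made-up example: one wrong character out of six.
reference = "今天天气很好"
transcript = "今天天汽很好"
print(f"CER = {cer(reference, transcript):.2%}")
```

A CER of 0.89% therefore corresponds to fewer than one character error per hundred reference characters.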

🖥️ Interface & Deployment: Accessible for All

🚀 Plug-and-Play Workflows

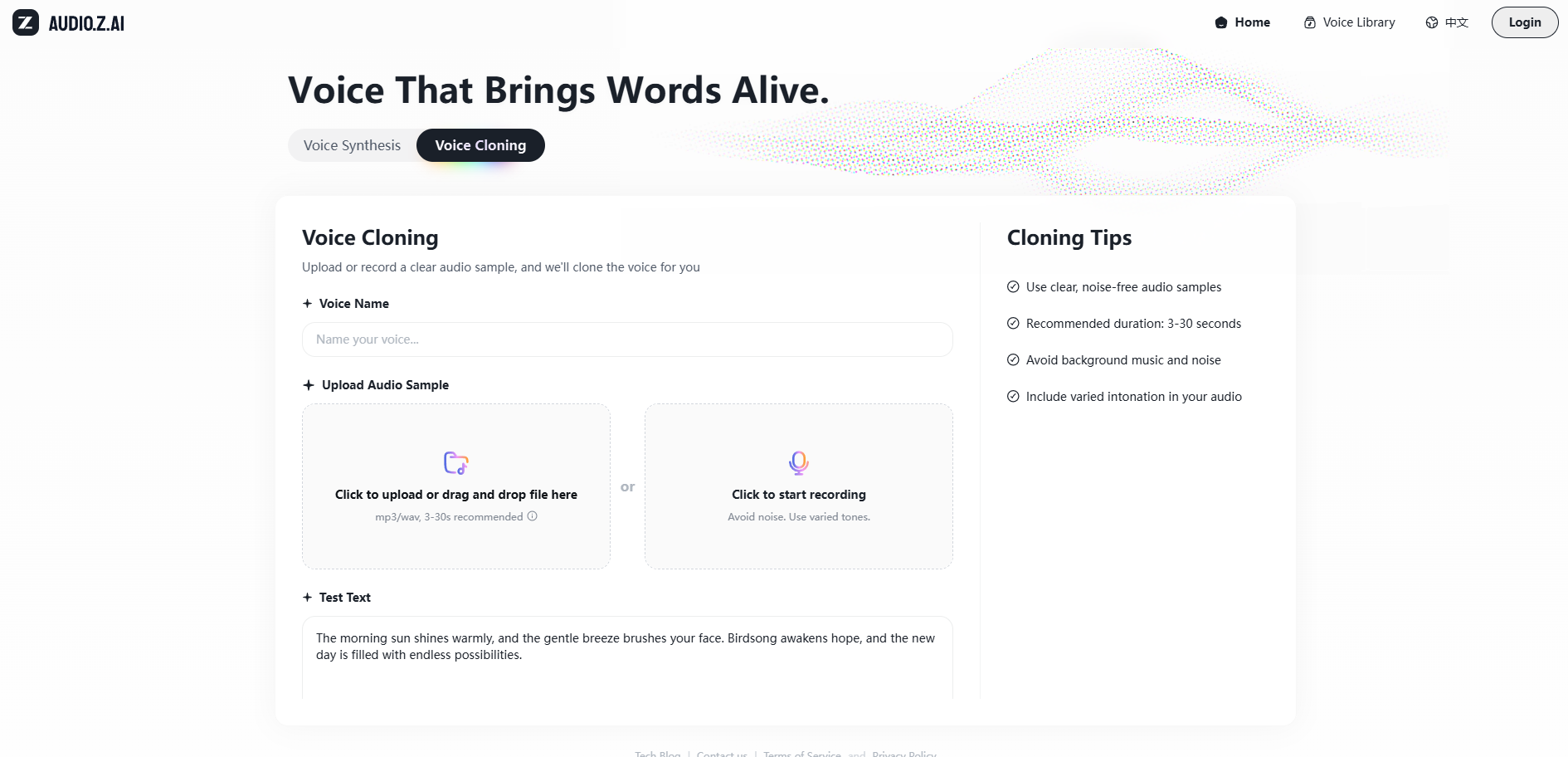

- Online Playgrounds: Test instantly via the Gradio demo or Z.ai platform — paste text, upload a voice clip, tweak speed/emotion sliders, and stream outputs in real time.

- Open-Source Repos: The Hugging Face and GitHub repositories include full inference scripts, streaming support, and step-by-step fine-tuning guides for developers.

- Enterprise-Grade API: Access via BigModel.cn, priced at about one-third of what industry peers charge, plus enterprise features like VPC isolation for secure deployment.

✨ Early Impact & Community Reception

- Blind Test Dominance: Crowned the most natural open-source TTS for Chinese in blind evaluations, with users praising its “human-like expressiveness.”

- Developer Love: Devs rave about audiobook production pipelines that cut hours of work to minutes, AI assistants that sound “alive,” and dubbing workflows that no longer need manual edits.

- Rapid Community Growth: Community forks are already emerging to extend multilingual support beyond its native Chinese + English mixed-text capability.

GLM-TTS proves reinforcement learning isn’t just for chatbots — it’s the key to unlocking truly expressive open-source speech. By democratizing emotional, controllable TTS with minimal data requirements and low costs, Zhipu AI is flooding the ecosystem with tools for lifelike AI agents, immersive content, and accessible audio solutions.

The future of synthetic voice? It’s not just crystal clear — it’s finally feeling real.